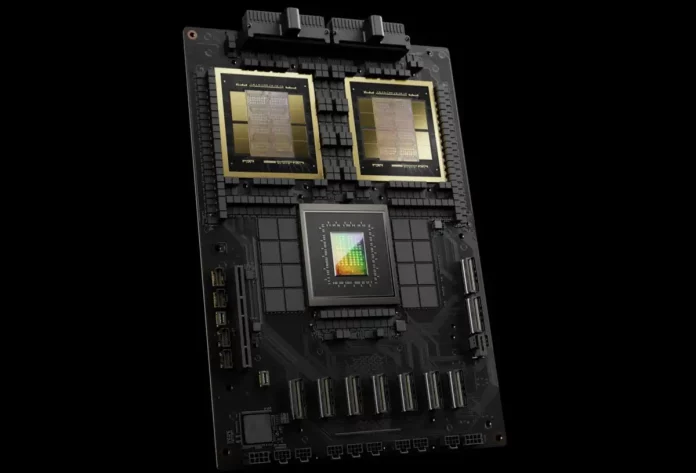

At GTC 2024, NVIDIA unveiled its latest AI chips, built on the new Blackwell GPU architecture. The flagship GB200 Grace Blackwell Superchip pairs two Blackwell B200 GPUs with one NVIDIA Grace CPU.

Specifications of NVIDIA Blackwell B200

The B200 succeeds the H100 AI chip and brings significant gains in performance and efficiency. With 208 billion transistors, the Blackwell B200 GPU delivers up to 20 petaflops of FP4 performance.

According to NVIDIA, the GB200 offers up to 30 times the performance of the H100 in LLM inference workloads while using up to 25 times less energy. On a GPT-3 LLM benchmark, the company says the GB200 is seven times faster than the H100.

NVIDIA also highlights power efficiency: 2,000 Blackwell GPUs can train a model with 1.8 trillion parameters using just 4 megawatts. The previous-generation Hopper GPUs needed 8,000 units consuming up to 15 megawatts for the same task.
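The quoted figures can be reduced to a few simple ratios. The sketch below uses only the numbers stated above (2,000 Blackwell GPUs at 4 MW versus 8,000 Hopper GPUs at 15 MW). It assumes both figures describe the same training job over the same duration, so the power ratio also approximates the energy ratio. The "per GPU" value is a naive average of the quoted total. It is not a chip power rating. The names `kw_per_gpu` and `compare` are illustrative only.

```python
# Figures as reported in the article for training a 1.8-trillion-parameter model.
BLACKWELL_GPUS = 2_000
BLACKWELL_MW = 4.0
HOPPER_GPUS = 8_000
HOPPER_MW = 15.0


def kw_per_gpu(total_mw: float, gpus: int) -> float:
    """Naive average of the quoted total power per GPU, in kilowatts."""
    return total_mw * 1_000 / gpus  # 1 MW = 1,000 kW


def compare() -> dict:
    """Ratios derived from the quoted figures (assumes the same job and duration)."""
    return {
        "gpu_count_ratio": HOPPER_GPUS / BLACKWELL_GPUS,  # how many fewer GPUs
        "power_ratio": HOPPER_MW / BLACKWELL_MW,          # how much less total power
        "blackwell_kw_per_gpu": kw_per_gpu(BLACKWELL_MW, BLACKWELL_GPUS),
        "hopper_kw_per_gpu": kw_per_gpu(HOPPER_MW, HOPPER_GPUS),
    }


if __name__ == "__main__":
    for name, value in compare().items():
        print(f"{name}: {value:g}")
```

The script prints a GPU-count ratio of 4, a power ratio of 3.75, 2 kW per Blackwell GPU, and 1.875 kW per Hopper GPU. The savings therefore come from needing far fewer GPUs. Each individual Blackwell GPU does not draw less power.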

NVIDIA has also designed a new NVLink switch chip with 50 billion transistors. It can connect up to 576 GPUs and lets them communicate at 1.8 TB/s, which addresses earlier communication bottlenecks. Previously, a system combining 16 GPUs would spend 60% of its time on communication and only 40% on computation.
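An Amdahl's-law-style calculation shows why the 60/40 split matters. The model is hypothetical. It assumes compute time stays fixed and that communication does not overlap with computation. The reduction factors passed to `speedup` are illustrative inputs, not figures NVIDIA has published, and the function name is made up for this example.

```python
# Time split quoted for a 16-GPU system before the new switch.
COMM_SHARE = 0.60     # fraction of wall-clock time spent communicating
COMPUTE_SHARE = 0.40  # fraction of wall-clock time spent computing


def speedup(comm_reduction: float) -> float:
    """Overall speedup if communication time is divided by `comm_reduction`.

    Hypothetical model: compute time is unchanged and does not overlap with
    communication, so the new run time is COMPUTE_SHARE + COMM_SHARE / comm_reduction
    (as a fraction of the original run time).
    """
    if comm_reduction <= 0:
        raise ValueError("comm_reduction must be positive")
    return 1.0 / (COMPUTE_SHARE + COMM_SHARE / comm_reduction)


if __name__ == "__main__":
    for factor in (1, 2, 4, float("inf")):
        print(f"communication {factor}x faster -> {speedup(factor):.3f}x overall")
```

Even if communication became free, this model caps the overall gain at 1 / 0.4 = 2.5x. Beyond that point, only faster compute can shorten the run.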

For enterprises, NVIDIA offers complete systems built around the GPU. These include the liquid-cooled GB200 NVL72, which fits 36 Grace CPUs and 72 Blackwell GPUs into a single rack.

Several companies, including Oracle, Amazon, Google, and Microsoft, plan to offer NVL72 racks through their cloud services. The Blackwell architecture behind the B200 is also likely to become the foundation of the upcoming RTX 5000 series.